Before I joined Arms of War, the game was running on a server set up the way you'd expect. The code was deployed via FTP, the database was installed directly on the VPS, and everything had to be managed by the administrator. This is fine if you're not looking to scale, but it's messy and disorganized, and it can take a lot of time to figure out why one environment works while another doesn't.

One of the first things I brought to Arms of War was Docker. Docker is a platform that lets you create individual containers, each for a specific purpose. For each one we write a file (a Dockerfile) that tells the server how to build the Docker image, giving us a self-contained environment where everything is boxed up, each piece with its own specific purpose. As an added bonus, we started using the same instructions to build our local test environments. Now, before deploying to the server test environment, we can spin everything up and test locally, the same way the server would run it.

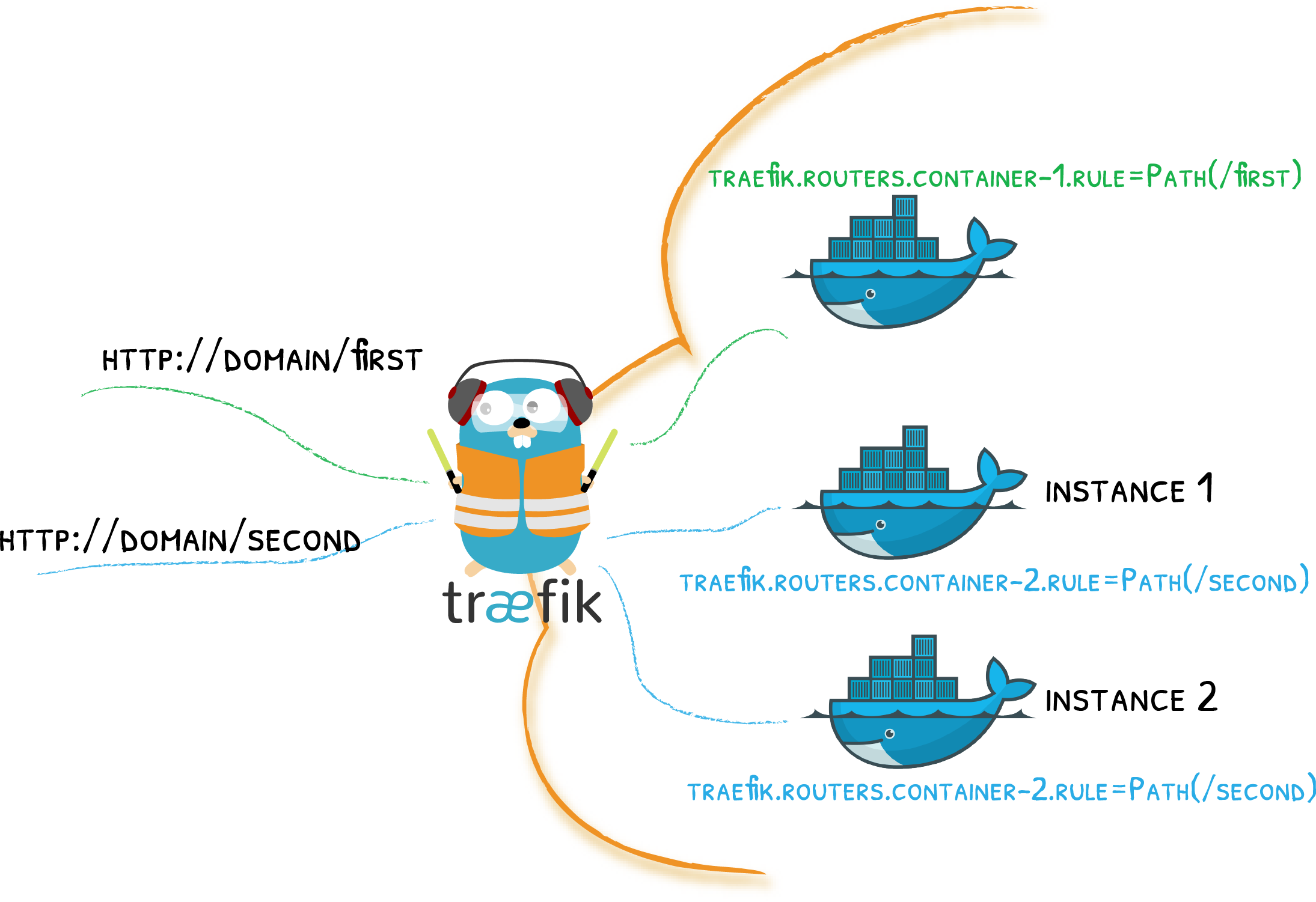

Another reason for using Docker was that we wanted to run more than one server or application on each VPS. One of the key drawbacks of doing this is that each Node application, web server, database, etc. must be exposed on its own port. Since web traffic arrives on ports 80 and 443, we needed a way to route requests to the correct application based on the hostname. Fortunately for us, there is a simple, self-configuring reverse proxy that integrates with Docker: Traefik. Using Traefik we were able to point https://blog.arms-of-war.com at our blog site while keeping the game available on https://arms-of-war.com, all running on the same server and underlying databases. This also gave us the added benefit of being able to spin up a test server and test database using the same configuration as the live application. Easy as pie!
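To make the hostname routing idea concrete, here is a minimal Python sketch of the decision a reverse proxy makes for each request. It is not how Traefik is configured or implemented; the `ROUTES` table, the `resolve_backend` function, and the backend addresses and ports are all made up for illustration. Only the two hostnames come from our setup.

```python
from typing import Optional

# Hypothetical routing table: public hostname -> internal container address.
# The backend addresses and ports are assumptions, not our real values.
ROUTES = {
    "blog.arms-of-war.com": "http://127.0.0.1:2368",
    "arms-of-war.com": "http://127.0.0.1:3000",
}


def resolve_backend(host_header: str) -> Optional[str]:
    """Return the backend for a request's Host header, or None if unknown."""
    # Drop an optional port suffix (e.g. "arms-of-war.com:443") and
    # normalize case, since hostnames are case-insensitive.
    hostname = host_header.split(":", 1)[0].strip().lower()
    return ROUTES.get(hostname)
```

Every request still comes in on port 80 or 443. The proxy looks at the hostname and forwards the request to whichever container owns it, so the individual applications never need to be exposed on the public ports themselves.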

We've had growing pains and a learning curve with this configuration, but I think we've gotten into a good rhythm and are now looking at the next steps to make configuration more seamless.

We've recently added another service to our Docker environment: Watchtower. This service monitors the running Docker containers and updates them when a new version of their image becomes available. In GitLab, our source control platform, we can create a pipeline to test and build the Docker images each time a change is merged. Using a combination of these solutions, our plan is to update the test environment every time we merge a change and get immediate feedback on it. Additionally, going in and running the last step, which moves the image to the production path, would allow us to deploy to all "production" servers within minutes. Not a bad flow for a platform that needs to scale!
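The check that drives this flow can be sketched in a few lines of Python. This is a simplified simulation of the idea, not Watchtower's actual code or API: the `RunningContainer` type, the `containers_to_update` function, and the digest lookup table are hypothetical names standing in for "what is running" and "what the registry currently has".

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunningContainer:
    name: str          # container name on the host
    image: str         # image reference the container was started from
    image_digest: str  # digest of the image the container is running


def containers_to_update(
    running: List[RunningContainer],
    registry_digests: Dict[str, str],
) -> List[str]:
    """Return the names of containers whose image has a newer build."""
    stale = []
    for container in running:
        latest = registry_digests.get(container.image)
        # Ignore images the registry does not know about; flag a container
        # only when its image reference now points at a different digest.
        if latest is not None and latest != container.image_digest:
            stale.append(container.name)
    return stale
```

In this model, merging a change makes the pipeline push a new build under the test image reference, the digest for that reference changes, and the test container is flagged for an update. Production containers stay untouched until an image is pushed to the production path.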

Update: Since originally writing this post, we've trialed both Kubernetes and Docker Swarm to scale out further. We're currently running a five-node Docker Swarm environment in a model similar to what is discussed here, and so far we've been happy with the results and its relative simplicity.